When you use OpenAI's GPT models through the API, OpenAI charges based on the number of tokens you send and receive.

A token isn't necessarily a word. Depending on the language you write in, a single word can be split into two or more tokens.

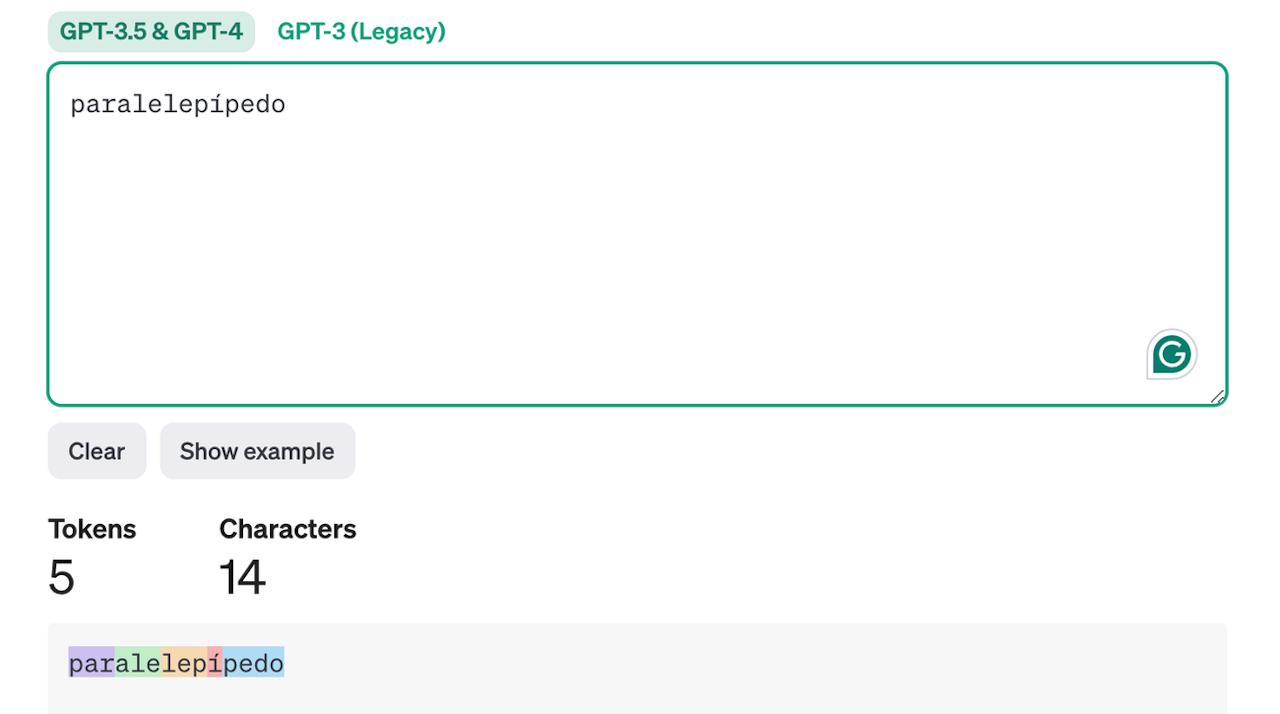

For example, the Portuguese word for "paving stone" is "paralelepípedo". In Portuguese it's a single word, but once tokenized for GPT-4 it becomes 5 tokens.

OpenAI provides a token counter on its website. Multiplying the number of tokens by the per-token price of the model you use gives an estimate of how much it would cost to send your prompt.
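As a rough illustration of that estimate, the arithmetic can be sketched as below. The function name and the prices are hypothetical placeholders, not OpenAI's actual rates, so check the pricing page for the model you use.

```javascript
// Hypothetical helper: estimates the cost of one request in US dollars.
// Prices are expressed per 1 million tokens. The values used below are
// placeholders for illustration, not real OpenAI prices.
function estimateCostUsd(inputTokens, outputTokens, pricing) {
  const inputCost = inputTokens * pricing.inputPerMillion;
  const outputCost = outputTokens * pricing.outputPerMillion;
  return (inputCost + outputCost) / 1_000_000;
}

// Assumed example prices: $10 per 1M input tokens, $30 per 1M output tokens.
const examplePricing = { inputPerMillion: 10, outputPerMillion: 30 };

// A prompt of 1200 tokens that produces a 300-token answer.
const cost = estimateCostUsd(1200, 300, examplePricing);
console.log(cost.toFixed(4)); // prints "0.0210"
```

Input and output tokens are kept separate because they are often priced differently.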

But what if you want to implement this feature in your application?

On the Tokenizer page, OpenAI mentions a Python library that does this calculation: https://github.com/openai/tiktoken.

Luckily, the community is huge, and a developer forked this library to create a JS package that provides WASM (WebAssembly) bindings for tiktoken:

https://github.com/dqbd/tiktoken

With that library, we can finally count tokens programmatically. After installing the tiktoken package, import the encoding_for_model function and pass it the model you want to use:

    import { encoding_for_model } from "tiktoken";

    const gpt4Enc = encoding_for_model("gpt-4-0125-preview");

Now you can pass your string to the encoder:

    import { encoding_for_model } from "tiktoken";

    // Load the encoder that matches the target model
    const gpt4Enc = encoding_for_model("gpt-4-0125-preview");
    const text = "paralelepípedo";

    console.log("Encoding text with gpt-4-0125-preview model");
    console.log("Text:", text);

    // encode() returns the token ids for the text
    const encoded = gpt4Enc.encode(text);

    console.log("number of tokens:", encoded.length);
    console.log("characters:", text.length);
    console.log(encoded);

    // Release the encoder once it is no longer needed
    gpt4Enc.free();

The encode call returns a Uint32Array whose length is the number of tokens:

    Encoding text with gpt-4-0125-preview model
    Text: paralelepípedo
    number of tokens: 5
    characters: 14
    Uint32Array(5) [ 1768, 1604, 64067, 2483, 53594 ]

This is exactly what we had on the website.

In long-running applications, make sure you call free() on the encoder once you are done with it, so the memory it holds is released.
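One way to make sure the encoder is always released is to wrap the work in try/finally. The sketch below uses a hypothetical helper (countTokens and its createEncoder parameter are not part of tiktoken) and only assumes an encoder object with the encode and free methods used above. A stand-in encoder keeps the example runnable without the library.

```javascript
// Hypothetical helper, not part of the tiktoken package.
// createEncoder: a function returning an object with encode(text) and free(),
// e.g. () => encoding_for_model("gpt-4-0125-preview") from the example above.
function countTokens(createEncoder, text) {
  const encoder = createEncoder();
  try {
    // encode() returns an array-like of token ids; its length is the count.
    return encoder.encode(text).length;
  } finally {
    // Runs even if encode() throws, so the encoder is never left allocated.
    encoder.free();
  }
}

// Stand-in encoder for demonstration only: one "token" per whitespace-separated
// word. A real tokenizer splits text very differently.
let freed = false;
const fakeEncoder = {
  encode: (text) =>
    Uint32Array.from(text.split(/\s+/).filter(Boolean), (_, i) => i),
  free: () => {
    freed = true;
  },
};

console.log(countTokens(() => fakeEncoder, "hello token world")); // prints 3
console.log(freed); // prints true
```

Creating an encoder has a cost of its own, so an app that counts tokens often may prefer to keep one encoder around and free it on shutdown instead.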

Caveats

Remember when I said this library is a WASM binding? That means you can't simply add it and start using it: both the JS and the .wasm files have to be included in your app bundle.

It also means that not every JS runtime or framework supports it. The library docs include a compatibility table and explain how to use it with various frameworks.
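As one illustration of what this can look like, a webpack 5 build can enable WebAssembly modules through its experiments option. This is a sketch that assumes a plain webpack 5 project. Other bundlers and frameworks need different settings, so the compatibility table in the library docs remains the reference.

```javascript
// webpack.config.js for a hypothetical webpack 5 project.
// experiments.asyncWebAssembly lets webpack bundle imported .wasm modules.
const config = {
  experiments: {
    asyncWebAssembly: true,
  },
};

// Export `config` from webpack.config.js, e.g. `module.exports = config;`.
```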

Alternatively, you can use js-tiktoken, a pure JS implementation of tiktoken, so you don't have to deal with WASM.